Mastering GPUs: A Beginner’s Guide to GPU-Accelerated DataFrames in Python

RAPIDS cuDF, with its pandas-like API, enables data scientists and engineers to quickly tap into the immense potential of parallel computing on GPUs with just a few changed lines of code. Read on for more.

If you’re working in Python with large datasets, perhaps several gigabytes in size, you can likely relate to the frustration of waiting hours for your queries to finish while your CPU-based pandas DataFrame struggles through each operation. This is exactly the situation in which a pandas user should consider leveraging GPUs for data processing with RAPIDS cuDF.


If you’re unfamiliar with GPU acceleration, this post is an easy introduction to the RAPIDS ecosystem and showcases the most common functionality of cuDF, the GPU-based pandas DataFrame counterpart.

Want a handy summary of these tips? Follow along with the downloadable cuDF cheat sheet.

Leveraging GPUs with cuDF DataFrames

cuDF is a data science building block for the RAPIDS suite of GPU-accelerated libraries. It is an EDA workhorse you can use to build data pipelines that process data and derive new features. As a fundamental component of the RAPIDS suite, cuDF underpins the other libraries. Like all components in the RAPIDS suite, cuDF uses CUDA as its backend to power GPU computations.

However, with an easy and familiar Python interface, cuDF users don't need to interact directly with that layer.

How cuDF Can Make Your Data Science Work Faster

Are you tired of watching the clock while your script runs? Whether you're handling string data or working with time series, there are many ways you can use cuDF to drive your data work forward.

- Time series analysis: Whether you're resampling data, extracting features, or conducting complex computations, cuDF offers a substantial speed-up, potentially up to 880x faster than pandas for time-series analysis.

- Real-time exploratory data analysis (EDA): Browsing through large datasets can be a chore with traditional tools, but cuDF's GPU-accelerated processing makes real-time exploration of even the biggest datasets possible.

- Machine learning (ML) data preparation: Speed up data transformation tasks and prepare your data for commonly used ML algorithms, such as regression, classification, and clustering, with cuDF's acceleration capabilities. Efficient processing means quicker model development and a shorter path to deployment.

- Large-scale data visualization: Whether you're creating heat maps for geographic data or visualizing complex financial trends, you can build high-performance, high-FPS visualizations by combining cuDF with cuxfilter. This integration makes real-time interactivity a practical part of your analytics cycle.

- Large-scale data filtering and transformation: For large datasets exceeding several gigabytes, you can perform filtering and transformation tasks using cuDF in a fraction of the time it takes with pandas.

- String data processing: String processing has traditionally been challenging and slow because of the complex, variable-length nature of textual data. GPU acceleration makes these operations much faster.

- GroupBy operations: GroupBy operations are a staple of data analysis but can be resource-intensive. cuDF speeds up these tasks significantly, so you gain insights faster when splitting and aggregating your data.
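The filtering, transformation, and GroupBy items above can be sketched in a few lines. This is a minimal sketch under stated assumptions: it imports cuDF when it is usable and otherwise falls back to pandas so that it also runs on CPU-only machines (the fallback, the `xdf` alias, and the `ON_GPU` flag are illustrative choices, not part of either library), and the sales data and column names are hypothetical.

```python
# Assumption for this sketch: use cuDF when available, otherwise fall back to pandas.
try:
    import cudf as xdf
    ON_GPU = True
except Exception:  # cuDF not installed, or no usable CUDA device
    import pandas as xdf
    ON_GPU = False

# Hypothetical sales data; the column names are illustrative only.
df = xdf.DataFrame({
    'store': ['a', 'b', 'a', 'c', 'b', 'a'],
    'units': [3, 0, 5, 2, 7, 1],
    'price': [2.0, 4.0, 2.0, 10.0, 4.0, 2.0],
})

# Filtering: keep only the rows that actually sold something.
sold = df[df['units'] > 0]

# Transformation: derive a new feature column from existing ones.
sold = sold.assign(revenue=sold['units'] * sold['price'])

# GroupBy: split by store and aggregate revenue per store.
# Group order can differ between libraries, so sort the keys explicitly.
revenue_by_store = sold.groupby('store')['revenue'].sum().sort_index()

# Bring the small aggregated result back to host (CPU) memory for inspection.
result = revenue_by_store.to_pandas() if ON_GPU else revenue_by_store
print(result)
```

The same four statements run unchanged on either library; only the import decides where the work happens.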

Familiar interface for GPU processing

The core premise of RAPIDS is to provide a familiar user experience to popular data science tools so that the power of NVIDIA GPUs is easily accessible for all practitioners. Whether you’re performing ETL, building ML models, or processing graphs, if you know pandas, NumPy, scikit-learn or NetworkX, you will feel at home when using RAPIDS.

Switching from a CPU to a GPU data science stack has never been easier: with a change as small as importing cuDF instead of pandas, you can harness the power of NVIDIA GPUs, speed up workloads 10-100x (on the low end), and become more productive, all while using your favorite tools.

The sample code below shows how familiar the cuDF API is to anyone who uses pandas.

import pandas as pd
import cudf

df_cpu = pd.read_csv('/data/sample.csv')    # pandas: loads the file into CPU memory
df_gpu = cudf.read_csv('/data/sample.csv')  # cuDF: same call, loads the file into GPU memory
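To see the "change only the import" idea end to end, the sketch below writes a tiny CSV file with the standard library and loads it through whichever library is available. The pandas fallback, the `xdf` alias, and the `ON_GPU` flag are assumptions of this sketch; the temporary file stands in for `/data/sample.csv`.

```python
import os
import tempfile

# Assumption for this sketch: use cuDF when available, otherwise fall back to pandas.
try:
    import cudf as xdf
    ON_GPU = True
except Exception:  # cuDF not installed, or no usable CUDA device
    import pandas as xdf
    ON_GPU = False

with tempfile.TemporaryDirectory() as tmp_dir:
    # Write a tiny CSV file so the example does not depend on /data/sample.csv.
    path = os.path.join(tmp_dir, 'sample.csv')
    with open(path, 'w') as f:
        f.write('id,score\n1,0.5\n2,1.5\n3,2.5\n')

    # Identical call on either library: only the import above differs.
    df = xdf.read_csv(path)

# The data is fully loaded, so it stays usable after the temp directory is removed.
mean_score = float(df['score'].mean())
print(df.shape, mean_score)
```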

Loading data from your favorite data sources

The reading and writing capabilities of cuDF have grown significantly since the first release of RAPIDS in October 2018. The data can be local to a machine, stored in an on-prem cluster, or in the cloud. cuDF uses the fsspec library to abstract most file-system-related tasks so you can focus on what matters most: creating features and building your model.

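Thanks to fsspec, reading data from a local or a cloud file system works the same way; for the latter, you only supply credentials or access options through `storage_options`. The example below reads the same file from two different locations; `{'anon': True}` requests anonymous access, which works for publicly readable S3 buckets: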

import cudf

df_local = cudf.read_csv('/data/sample.csv')
df_remote = cudf.read_csv(
    's3://<bucket>/sample.csv',
    storage_options={'anon': True},
)

cuDF supports multiple file formats: text-based formats like CSV/TSV and JSON, column-oriented formats like Parquet and ORC, and row-oriented formats like Avro. In terms of file systems, cuDF can read files from the local file system; cloud providers such as AWS S3, Google Cloud Storage (GCS), and Azure Blob Storage/Data Lake; on- or off-prem Hadoop File Systems (HDFS); and directly from HTTP or (S)FTP web servers, Dropbox, Google Drive, or the Jupyter file system.

Creating and saving DataFrames with ease

Reading files is not the only way to create cuDF DataFrames. In fact, there are at least four ways to do so.

Pass a list of values to create a DataFrame with one column:

cudf.DataFrame([1,2,3,4], columns=['foo'])

Pass a dictionary to create a DataFrame with multiple columns:

cudf.DataFrame({
    'foo': [1, 2, 3, 4],
    'bar': ['a', 'b', 'c', None],
})

Create an empty DataFrame and assign columns to it:

df_sample = cudf.DataFrame()
df_sample['foo'] = [1, 2, 3, 4]
df_sample['bar'] = ['a', 'b', 'c', None]

Pass a list of tuples, one tuple per row:

cudf.DataFrame([
    (1, 'a'),
    (2, 'b'),
    (3, 'c'),
    (4, None),
], columns=['ints', 'strings'])
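Because these constructors describe the same table, a quick sanity check is to build one frame several ways and compare the results with `equals`. This sketch assumes a pandas fallback when cuDF is unavailable; the `xdf` alias and the column names are illustrative.

```python
# Assumption for this sketch: use cuDF when available, otherwise fall back to pandas.
try:
    import cudf as xdf
except Exception:  # cuDF not installed, or no usable CUDA device
    import pandas as xdf

# Dictionary: one key per column.
from_dict = xdf.DataFrame({
    'ints': [1, 2, 3, 4],
    'strings': ['a', 'b', 'c', None],
})

# Empty frame, then assign columns one at a time.
assigned = xdf.DataFrame()
assigned['ints'] = [1, 2, 3, 4]
assigned['strings'] = ['a', 'b', 'c', None]

# List of tuples: one tuple per row, column names given separately.
from_rows = xdf.DataFrame(
    [(1, 'a'), (2, 'b'), (3, 'c'), (4, None)],
    columns=['ints', 'strings'],
)

# equals compares shape, column labels, dtypes, and values (missing values in matching positions count as equal).
print(from_dict.equals(assigned), from_dict.equals(from_rows))
```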

You can also convert to and from other memory representations:

- From a GPU matrix represented as a Numba DeviceNDArray.

- Through DLPack, the memory-object standard used to share tensors between deep learning frameworks, and through Apache Arrow, a format that makes it much more convenient to exchange in-memory data across programming languages.

- To and from pandas DataFrames and Series.
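For the pandas route, `cudf.from_pandas` copies a host DataFrame into GPU memory and `to_pandas` copies it back. A minimal sketch, assuming you want the example to degrade gracefully: when cuDF cannot be imported, it skips the GPU round trip instead of failing (that fallback is a choice made for this sketch, not cuDF behavior).

```python
import pandas as pd

try:
    import cudf
except Exception:  # cuDF not installed, or no usable CUDA device
    cudf = None

# Start from an ordinary pandas DataFrame in host (CPU) memory.
pdf = pd.DataFrame({'foo': [1, 2, 3, 4], 'bar': ['a', 'b', 'c', 'd']})

if cudf is not None:
    gdf = cudf.from_pandas(pdf)  # copy host -> GPU memory
    back = gdf.to_pandas()       # copy GPU -> host memory
else:
    # Sketch assumption: without cuDF, skip the GPU hop so the example still runs.
    back = pdf.copy()

print(back.equals(pdf))
```

In a real pipeline you would typically convert once at the boundaries and keep the heavy work on the GPU in between, since each conversion copies the data across the PCIe bus.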

In addition, cuDF can save the data stored in a DataFrame to multiple formats and file systems, including most of the formats it can read.
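As an illustration of writing and reading back, the sketch below saves a DataFrame as CSV and as JSON Lines in a temporary directory and checks that both files load back to the same data. It assumes a pandas fallback when cuDF is not available; the file names and columns are hypothetical.

```python
import os
import tempfile

# Assumption for this sketch: use cuDF when available, otherwise fall back to pandas.
try:
    import cudf as xdf
except Exception:  # cuDF not installed, or no usable CUDA device
    import pandas as xdf

df = xdf.DataFrame({'foo': [1, 2, 3, 4], 'bar': ['a', 'b', 'c', 'd']})

with tempfile.TemporaryDirectory() as tmp_dir:
    csv_path = os.path.join(tmp_dir, 'sample.csv')
    json_path = os.path.join(tmp_dir, 'sample.jsonl')

    # index=False keeps the row index out of the CSV file.
    df.to_csv(csv_path, index=False)
    # JSON Lines: one JSON object per row.
    df.to_json(json_path, orient='records', lines=True)

    # Read both files back while the temporary directory still exists.
    from_csv = xdf.read_csv(csv_path)
    from_json = xdf.read_json(json_path, lines=True)

print(from_csv.equals(df), from_json.equals(df))
```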

All of these capabilities make it possible to get up and running quickly no matter what your task is or where your data lives.

Extracting, transforming, and summarizing data

The fundamental data science task, and the one that all data scientists complain about, is cleaning, featurizing and getting familiar with the dataset. We spend 80% of our time doing that. Why does it take so much time?

One reason is that the questions we ask of the dataset take too long to answer. Anyone who has tried to read and process a 2GB dataset on a CPU knows what we’re talking about.

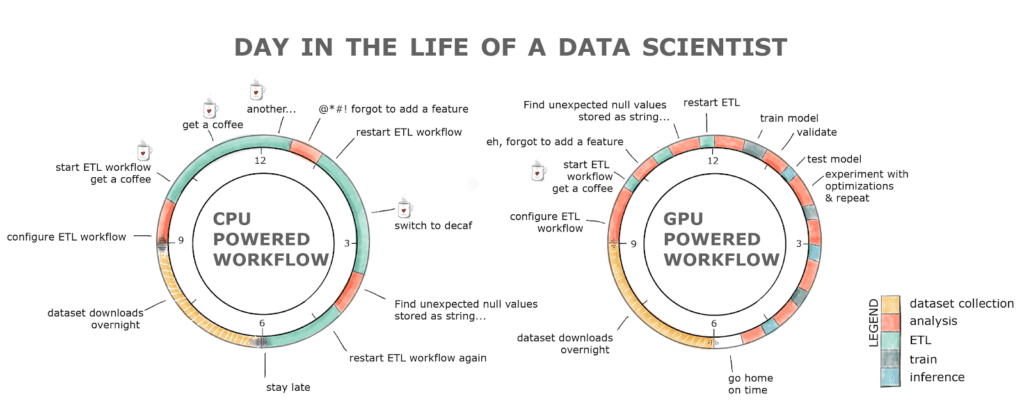

Additionally, because we’re human and make mistakes, rerunning a pipeline can quickly turn into a full-day exercise. This results in lost productivity and, judging by Figure 1, likely a coffee addiction.

Figure 1. Typical workday for a developer using a GPU- vs. CPU-powered workflow

A GPU-powered RAPIDS workflow alleviates these hurdles. The ETL stage is typically 8-20x faster, so loading that 2GB dataset takes seconds instead of minutes, and cleaning and transforming the data is much faster as well. All of this comes with a familiar interface and minimal code changes.

Working with strings and dates on GPUs

As recently as five years ago, working with strings and dates on GPUs was considered almost impossible without resorting to low-level programming languages like CUDA. After all, GPUs were designed to process graphics, that is, to manipulate large arrays and matrices of ints and floats, not strings or dates.

RAPIDS lets you not only read strings into GPU memory but also extract features from them, process them, and manipulate them. If you are familiar with regular expressions, extracting useful information from a document on a GPU is straightforward with cuDF. For example, to find and extract all the words in your document that match the [a-z]*flow pattern (such as dataflow, workflow, or flow), all you need is:

df['string'].str.findall('([a-z]*flow)')
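To try the pattern on concrete input, the sketch below runs the same `findall` call on two hypothetical documents stored in a column named `string`. It assumes a pandas fallback when cuDF is unavailable; the `ON_GPU` flag and the conversion step at the end exist only to turn the per-row match lists into plain Python lists for printing.

```python
# Assumption for this sketch: use cuDF when available, otherwise fall back to pandas.
try:
    import cudf as xdf
    ON_GPU = True
except Exception:  # cuDF not installed, or no usable CUDA device
    import pandas as xdf
    ON_GPU = False

# Hypothetical documents, one per row, in a column named 'string'.
df = xdf.DataFrame({'string': [
    'dataflow and workflow go with the flow',
    'no matches here',
]})

# Every substring matching [a-z]*flow, returned as a list per row.
matches = df['string'].str.findall('([a-z]*flow)')

# cuDF holds the results as a GPU list column, so convert via Arrow;
# pandas already stores Python lists in an object column.
as_lists = matches.to_arrow().to_pylist() if ON_GPU else matches.tolist()
print(as_lists)
```

Rows without a match produce an empty list rather than a missing value, which keeps downstream length or explode operations simple.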

Extracting useful features from dates or querying the data for a specific period of time has become easier and faster thanks to RAPIDS as well.

import datetime as dt

dt_to = dt.datetime.strptime("2020-10-03", "%Y-%m-%d")
df.query('dttm <= @dt_to')  # rows whose dttm value is on or before dt_to
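A self-contained version of the date example, which also extracts month and day features with the `.dt` accessor, might look like this. It assumes a pandas fallback when cuDF is unavailable, and the timestamps and `value` column are made up for illustration.

```python
import datetime as dt

import numpy as np

# Assumption for this sketch: use cuDF when available, otherwise fall back to pandas.
try:
    import cudf as xdf
    ON_GPU = True
except Exception:  # cuDF not installed, or no usable CUDA device
    import pandas as xdf
    ON_GPU = False

# Hypothetical timestamps; a NumPy datetime64 array works for both libraries.
df = xdf.DataFrame({
    'dttm': np.array(['2020-10-01', '2020-10-03', '2020-10-05'], dtype='datetime64[ns]'),
    'value': [10, 20, 30],
})

# Feature extraction via the .dt accessor.
df['month'] = df['dttm'].dt.month
df['day'] = df['dttm'].dt.day

# Query by period: @dt_to refers to the local Python variable.
dt_to = dt.datetime.strptime("2020-10-03", "%Y-%m-%d")
filtered = df.query('dttm <= @dt_to')

# Bring the small result back to host memory for inspection.
host = filtered.to_pandas() if ON_GPU else filtered
print(host)
```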

Empowering pandas Users with GPU Acceleration

The transition from a CPU to a GPU data science stack is straightforward with RAPIDS. Importing cuDF instead of pandas is a small change that can deliver immense benefits. Whether you're working on a local GPU box or scaling up to full-fledged data centers, GPU acceleration with RAPIDS provides 10-100x speed improvements (at the low end). This means higher productivity while you keep using your favorite tools, even in the most demanding, large-scale scenarios.

RAPIDS enables data scientists to complete in minutes tasks that once took hours or even days, increasing productivity and lowering overall costs.

To start applying these techniques to your own dataset, read the accelerated data analytics series on the NVIDIA Technical Blog.

Editor’s Note: This post was updated with permission and originally adapted from the NVIDIA Technical Blog.